Athey, Susan, Julie Tibshirani, and Stefan Wager. 2019. “Generalized Random Forests.”

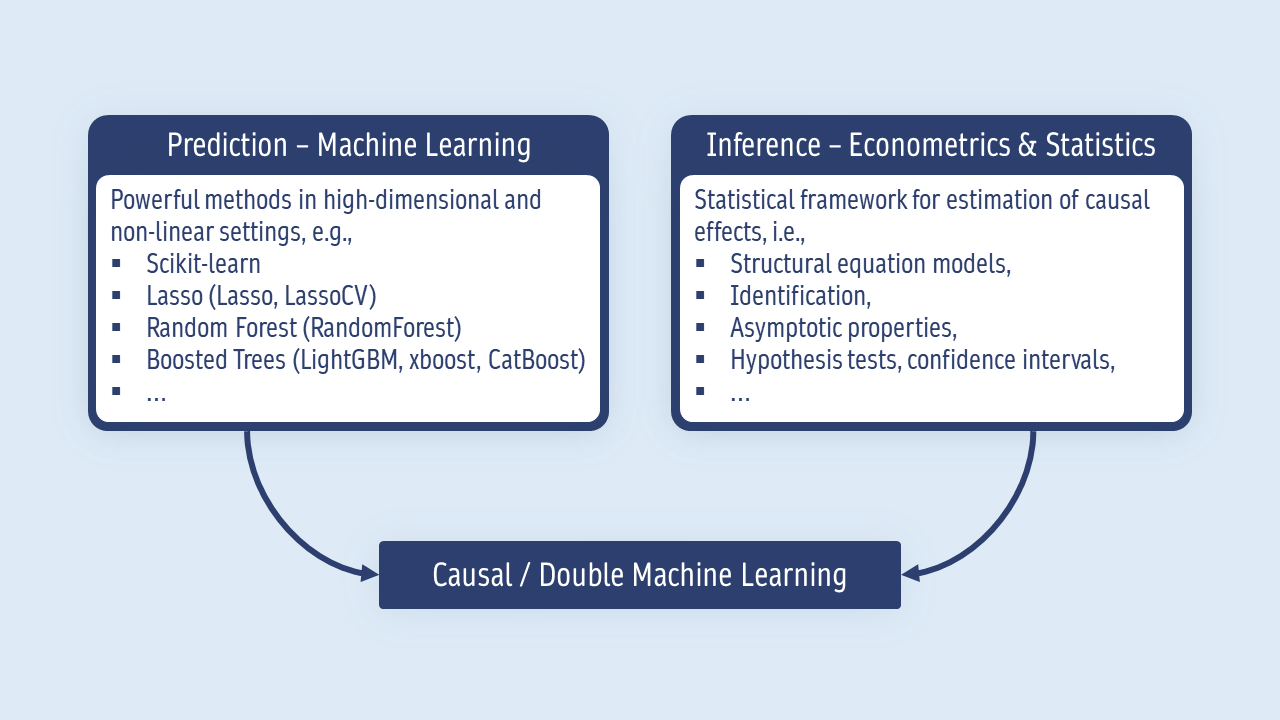

Bach, Philipp, Victor Chernozhukov, Sven Klaassen, Malte S Kurz, and Martin Spindler. 2021. “DoubleML – An Object-Oriented Implementation of Double Machine Learning in R.” https://arxiv.org/abs/2103.09603.

Bach, Philipp, Victor Chernozhukov, Malte S Kurz, and Martin Spindler. 2021. “DoubleML – An Object-Oriented Implementation of Double Machine Learning in Python.” arXiv Preprint arXiv:2104.03220.

Belloni, Alexandre, Victor Chernozhukov, and Christian Hansen. 2014. “Inference on Treatment Effects After Selection Among High-Dimensional Controls.” The Review of Economic Studies 81 (2): 608–50.

Chernozhukov, Victor, Denis Chetverikov, Mert Demirer, Esther Duflo, Christian Hansen, Whitney Newey, and James Robins. 2018. “Double/Debiased Machine Learning for Treatment and Structural Parameters.” The Econometrics Journal 21 (1): C1–68. https://onlinelibrary.wiley.com/doi/abs/10.1111/ectj.12097.

Chernozhukov, Victor, Whitney K. Newey, Victor Quintas-Martinez, and Vasilis Syrgkanis. 2022. “RieszNet and ForestRiesz: Automatic Debiased Machine Learning with Neural Nets and Random Forests.” https://arxiv.org/abs/2110.03031.

Shi, Claudia, David M. Blei, and Victor Veitch. 2019. “Adapting Neural Networks for the Estimation of Treatment Effects.” https://arxiv.org/abs/1906.02120.