Abadie, Alberto. 2005. “Semiparametric Difference-in-Differences Estimators.” The Review of Economic Studies 72 (1): 1–19.

Bach, Philipp, Victor Chernozhukov, Carlos Cinelli, Lin Jia, Sven Klaassen, Nils Skotara, and Martin Spindler. 2024. “Sensitivity Analysis for Causal Machine Learning – a Tutorial.”

Bach, Philipp, Sven Klaassen, Jannis Kueck, Mara Mattes, and Martin Spindler. 2024. “Double Machine Learning and Sensitivity Analysis for Difference in Differences Models.”

Callaway, Brantly. 2023. “Difference-in-Differences for Policy Evaluation.” Handbook of Labor, Human Resources and Population Economics, 1–61.

Callaway, Brantly, and Pedro HC Sant’Anna. 2021. “Difference-in-Differences with Multiple Time Periods.” Journal of Econometrics 225 (2): 200–230.

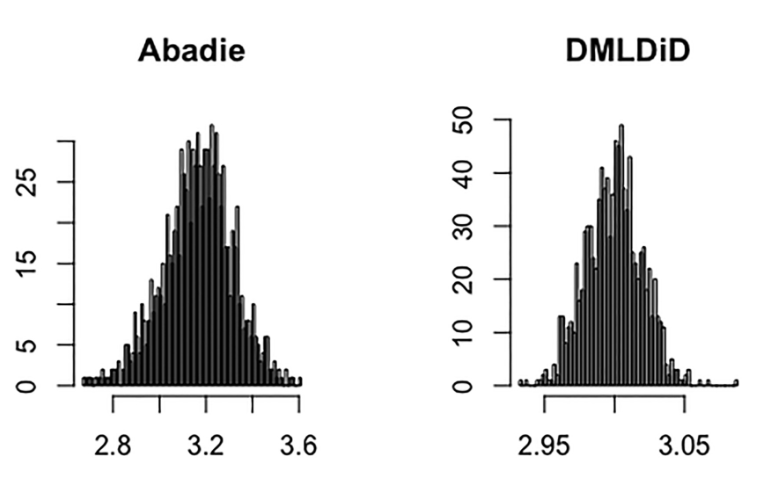

Chang, Neng-Chieh. 2020. “Double/Debiased Machine Learning for Difference-in-Differences Models.” The Econometrics Journal 23 (2): 177–91.

Chernozhukov, Victor, Denis Chetverikov, Mert Demirer, Esther Duflo, Christian Hansen, Whitney Newey, and James Robins. 2018. “Double/Debiased Machine Learning for Treatment and Structural Parameters.” The Econometrics Journal 21 (1): C1–68. https://onlinelibrary.wiley.com/doi/abs/10.1111/ectj.12097.

Chernozhukov, Victor, Carlos Cinelli, Whitney Newey, Amit Sharma, and Vasilis Syrgkanis. 2022. “Long Story Short: Omitted Variable Bias in Causal Machine Learning.” National Bureau of Economic Research.

Cinelli, Carlos, and Chad Hazlett. 2020. “Making Sense of Sensitivity: Extending Omitted Variable Bias.” Journal of the Royal Statistical Society Series B: Statistical Methodology 82 (1): 39–67.

Cunningham, Scott. 2021. Causal Inference: The Mixtape. Yale University Press.

De Chaisemartin, Clément, and Xavier d’Haultfoeuille. 2023. “Two-Way Fixed Effects and Differences-in-Differences with Heterogeneous Treatment Effects: A Survey.” The Econometrics Journal 26 (3): C1–30.

Manski, Charles F, and John V Pepper. 2018. “How Do Right-to-Carry Laws Affect Crime Rates? Coping with Ambiguity Using Bounded-Variation Assumptions.” Review of Economics and Statistics 100 (2): 232–44.

Rambachan, Ashesh, and Jonathan Roth. 2023. “A More Credible Approach to Parallel Trends.” The Review of Economic Studies 90 (5): 2555–91. https://doi.org/10.1093/restud/rdad018.

Roth, Jonathan, Pedro HC Sant’Anna, Alyssa Bilinski, and John Poe. 2023. “What’s Trending in Difference-in-Differences? A Synthesis of the Recent Econometrics Literature.” Journal of Econometrics.

Sant’Anna, Pedro HC, and Jun Zhao. 2020. “Doubly Robust Difference-in-Differences Estimators.” Journal of Econometrics 219 (1): 101–22.

Zimmert, Michael. 2018. “Efficient Difference-in-Differences Estimation with High-Dimensional Common Trend Confounding.” arXiv Preprint arXiv:1809.01643.